Note: This post is aimed at folks already knee-deep in the Model Context Protocol (MCP) and AI infrastructure. If you’re new to MCP, you may want to start with a high-level overview first and then come back here for the details.

If you’ve ever built or supported a healthcare integration, you know the work goes far beyond connecting endpoints. It’s orchestration, mapping, validation, routing, troubleshooting, and the constant vigilance required to keep data flowing safely. It’s powerful, but it’s also brittle, repetitive, and deeply manual.

We’re moving toward a world where interoperability is shaped, monitored, and repaired by AI agents that understand intent and can act on it. MCP is the foundation of that shift. By giving AI systems a stable, enforceable set of actions—typed tools they can safely call—MCP turns LLMs from observers into agents that can configure, monitor, and eventually heal the interoperability fabric itself.

Practically, this means shifting interoperability from static configuration toward programmable infrastructure. Intent becomes a first-class input, and integration building blocks are exposed as structured tools, so AI can safely participate in design, deployment, and operations rather than just consuming outputs.
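To make “typed tools” concrete: MCP servers advertise each tool with a name, a description, and a JSON Schema (`inputSchema`) for its arguments, so a proposed call can be checked before anything executes. The sketch below is a minimal illustration under that assumption. The `create_route` tool, its fields, and the `check_arguments` helper are hypothetical, not Redox’s actual tool catalog, and the checker covers only a small subset of JSON Schema.

```python
from typing import Any

# A tool descriptor in the general shape MCP servers use to advertise tools:
# a name, a description, and a JSON Schema describing the arguments.
# The tool name and its fields are hypothetical, not a published Redox tool.
CREATE_ROUTE_TOOL: dict[str, Any] = {
    "name": "create_route",
    "description": "Route data feeds from a source system to a destination.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "environment": {"type": "string", "enum": ["staging", "production"]},
            "feeds": {"type": "array", "items": {"type": "string"}},
            "source": {"type": "string"},
            "destination": {"type": "string"},
        },
        "required": ["environment", "feeds", "source", "destination"],
    },
}

# Only the JSON Schema types this sketch needs.
_JSON_TYPES: dict[str, type] = {"object": dict, "array": list, "string": str}


def check_arguments(schema: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Return the problems with a proposed call; an empty list means it may proceed.

    Deliberately minimal: checks required keys, unknown keys, top-level types,
    enums, and array item types. A real server would use a full JSON Schema validator.
    """
    problems: list[str] = []
    props = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in args:
            problems.append(f"missing required argument: {key}")
    for key, value in args.items():
        spec = props.get(key)
        if spec is None:
            problems.append(f"unexpected argument: {key}")
            continue
        if not isinstance(value, _JSON_TYPES[spec["type"]]):
            problems.append(f"{key}: expected {spec['type']}")
            continue
        if "enum" in spec and value not in spec["enum"]:
            problems.append(f"{key}: must be one of {spec['enum']}")
        if spec["type"] == "array":
            item_type = _JSON_TYPES[spec["items"]["type"]]
            if not all(isinstance(item, item_type) for item in value):
                problems.append(f"{key}: every item must be {spec['items']['type']}")
    return problems


schema = CREATE_ROUTE_TOOL["inputSchema"]
print(check_arguments(schema, {
    "environment": "staging", "feeds": ["ADT", "Orders"],
    "source": "ehr", "destination": "databricks-delta-lake",
}))  # prints []
# Reports the missing source and destination, the bad enum value, and the non-array feeds.
print(check_arguments(schema, {"environment": "prod", "feeds": "ADT"}))
```

The point of the schema is that a malformed call is rejected with a readable reason before it reaches any platform API, which is what lets an agent act without being trusted to get every argument right.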

In 2026, Redox will be incorporating AI across our tech stack, tooling, customer features, and offerings. We’re leaning into MCP because it gives us a disciplined way to expose our platform as a set of typed, discoverable capabilities for agents, while keeping the hard problems (auth, tenancy, routing, safety, observability) inside the infrastructure where they belong.
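One way to read “keeping the hard problems inside the infrastructure” is that the server, not the model, decides who is calling and which tenant a call belongs to. A minimal sketch, assuming a hypothetical `Caller` identity produced by the server’s own authentication layer and a hypothetical `guarded` decorator (neither is part of the MCP SDK or the Redox API), in which every tool checks scope and tenancy before its body runs:

```python
from dataclasses import dataclass
from functools import wraps
from typing import Any, Callable


@dataclass(frozen=True)
class Caller:
    """Identity the server attaches after authentication; never supplied by the model."""
    principal: str
    tenant_id: str
    scopes: frozenset[str]


class Forbidden(Exception):
    """Raised when a tool call fails an authorization or tenancy check."""


ToolHandler = Callable[[Caller, dict[str, Any]], dict[str, Any]]


def guarded(required_scope: str) -> Callable[[ToolHandler], ToolHandler]:
    """Hypothetical decorator: enforce scope and tenancy before a tool body runs."""
    def wrap(handler: ToolHandler) -> ToolHandler:
        @wraps(handler)
        def inner(caller: Caller, args: dict[str, Any]) -> dict[str, Any]:
            if required_scope not in caller.scopes:
                raise Forbidden(f"{caller.principal} lacks scope {required_scope}")
            # The tenant comes from the authenticated caller; a model-supplied
            # tenant_id that disagrees with it is rejected rather than trusted.
            if args.get("tenant_id", caller.tenant_id) != caller.tenant_id:
                raise Forbidden("cross-tenant access denied")
            return handler(caller, {**args, "tenant_id": caller.tenant_id})
        return inner
    return wrap


@guarded("config:write")
def create_route(caller: Caller, args: dict[str, Any]) -> dict[str, Any]:
    # Hypothetical tool body; a real one would call the platform API here.
    return {"tenant_id": args["tenant_id"], "feeds": args["feeds"], "status": "created"}


agent = Caller("agent-1", "tenant-a", frozenset({"config:write"}))
print(create_route(agent, {"feeds": ["ADT"]}))
# {'tenant_id': 'tenant-a', 'feeds': ['ADT'], 'status': 'created'}
```

Because the checks live in the wrapper, individual tool bodies never see an unauthorized or cross-tenant request, and the model has no argument it can set to widen its own access.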

Redox sits at the point where healthcare data first takes shape as it moves between systems: messages are normalized, transformed, validated, and enriched. By adding an MCP server on top of our Platform API, we’re turning that interoperability fabric into tools and data sources that AI systems can call in a principled, repeatable way.

Previous blog posts dive into why healthcare needs an AI-ready data and interoperability foundation that doesn’t just move messages, but understands and improves them. In this post, we’ll go a level deeper and walk through two concrete ways we’re using MCP at Redox:

- Turning configuration into a conversational, intent-driven workflow

- Applying the same pattern to observability and data health

Use Case 1: Use MCP to Stand Up Integrations

Our MCP server sits directly on top of the Redox Platform API. Its purpose is simple: Let teams describe what they want to achieve in natural language and then safely execute the required steps.

Natural language becomes the interface; MCP is the contract. People describe what they want in plain language, and the system handles how it gets implemented. The result is less time in config screens and docs, fewer handoffs between teams, and far less bespoke glue code.

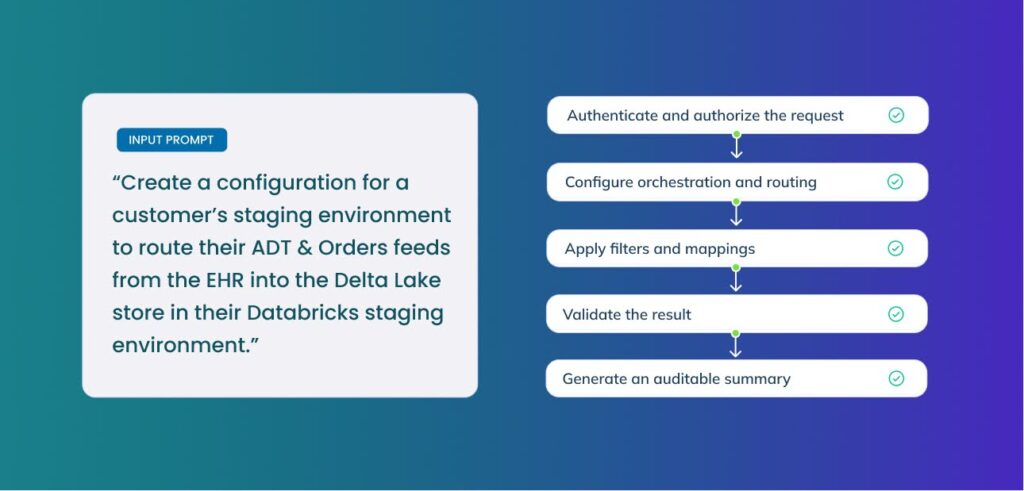

For example, instead of writing low-level configuration, an input might say:

“Create a configuration for this customer’s staging environment to route their ADT + Orders feeds from the EHR into the Delta Lake store in their Databricks staging environment.”

From that instruction, the MCP server can kick off the right tasks:

- Authenticate and authorize the request

- Configure orchestration and routing

- Apply filters and mappings

- Validate the result

- Generate an auditable summary of what changed

Figure 1. From Intent to Action with MCP

This example shows how a single natural-language input triggers the corresponding MCP workflow: authenticating the request, configuring routing, applying mappings, validating results, and generating an auditable summary.
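The steps in this workflow can be sketched as an ordered pipeline in which each step either succeeds and appends a line to the audit trail or stops the run. This is a minimal illustration, not Redox’s implementation: `RouteIntent` stands in for the structured arguments an LLM might extract from the example request, the step bodies are placeholders for Platform API calls, authentication is assumed to have already happened upstream (only authorization is shown), and the accumulated `audit` list plays the role of the auditable summary.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class RouteIntent:
    """Structured arguments an agent might extract from the request (hypothetical fields)."""
    customer: str
    environment: str
    feeds: list[str]
    source: str
    destination: str


@dataclass
class RunResult:
    ok: bool = True
    audit: list[str] = field(default_factory=list)


State = dict[str, Any]


def authorize(intent: RouteIntent, state: State) -> str:
    # Stand-in policy: this sketch only allows staging changes without review.
    if intent.environment != "staging":
        raise PermissionError("non-staging changes require human approval")
    return f"authorized change for {intent.customer}/{intent.environment}"


def configure_routing(intent: RouteIntent, state: State) -> str:
    state["routes"] = [(feed, intent.source, intent.destination) for feed in intent.feeds]
    return f"configured {len(state['routes'])} routes"


def apply_mappings(intent: RouteIntent, state: State) -> str:
    # Hypothetical mapping names; a real step would select filters and mappings per feed.
    state["mappings"] = {feed: f"{feed.lower()}-to-delta" for feed in intent.feeds}
    return f"applied {len(state['mappings'])} mappings"


def validate(intent: RouteIntent, state: State) -> str:
    unmapped = [feed for feed, _, _ in state["routes"] if feed not in state["mappings"]]
    if unmapped:
        raise ValueError(f"routes without mappings: {unmapped}")
    return "validation passed"


Step = Callable[[RouteIntent, State], str]
STEPS: list[Step] = [authorize, configure_routing, apply_mappings, validate]


def run(intent: RouteIntent) -> RunResult:
    """Run each step in order, recording an audit line per step and stopping at the first failure."""
    state: State = {}
    result = RunResult()
    for step in STEPS:
        try:
            result.audit.append(f"{step.__name__}: {step(intent, state)}")
        except Exception as exc:
            result.ok = False
            result.audit.append(f"{step.__name__}: FAILED ({exc})")
            break
    return result


intent = RouteIntent("acme", "staging", ["ADT", "Orders"], "ehr", "databricks-delta-lake")
for line in run(intent).audit:
    print(line)
# authorize: authorized change for acme/staging
# configure_routing: configured 2 routes
# apply_mappings: applied 2 mappings
# validate: validation passed
```

Keeping each step as a plain function with a uniform signature makes the order explicit and means a failed run still leaves a record of exactly how far it got.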

What used to be a sequence of manual changes becomes a repeatable flow. The intent lives in natural language; MCP turns that into concrete API calls, validation steps, and an audit trail. Teams spend more time on edge cases and higher-order design decisions, and less on wiring up near-identical configurations over and over.
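For the audit trail, one simple approach (an assumption here, not necessarily how Redox records changes) is to diff the configuration before and after a run and emit a sorted, one-line-per-setting summary. Because the output is deterministic, replaying the same intent against an already-configured environment yields an empty summary, which is a cheap way to confirm a flow is repeatable. The `flatten` and `change_summary` helpers and the config shape are hypothetical.

```python
import json
from typing import Any


def flatten(config: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten nested dicts into dotted paths so each setting can be compared individually."""
    flat: dict[str, Any] = {}
    for key, value in config.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path + "."))
        else:
            flat[path] = value
    return flat


def change_summary(before: dict[str, Any], after: dict[str, Any]) -> list[str]:
    """Deterministic list of added (+), removed (-), and changed (~) settings."""
    old, new = flatten(before), flatten(after)
    lines: list[str] = []
    for path in sorted(old.keys() | new.keys()):
        if path not in old:
            lines.append(f"+ {path} = {json.dumps(new[path])}")
        elif path not in new:
            lines.append(f"- {path} (was {json.dumps(old[path])})")
        elif old[path] != new[path]:
            lines.append(f"~ {path}: {json.dumps(old[path])} -> {json.dumps(new[path])}")
    return lines


before = {"routes": {"adt": {"destination": "none"}}}
after = {"routes": {"adt": {"destination": "delta-lake"},
                    "orders": {"destination": "delta-lake"}}}
for line in change_summary(before, after):
    print(line)
# ~ routes.adt.destination: "none" -> "delta-lake"
# + routes.orders.destination = "delta-lake"
print(change_summary(after, after))  # prints []; re-applying the same config changes nothing
```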

Use Case 2: Use MCP to Monitor and Troubleshoot Data in Production

Standing up integrations is only half the story. An AI-ready interoperability foundation also needs to understand and explain what’s happening in production.

We’re applying the same MCP-based approach to observability. Instead of relying solely on dashboards, logs, and ad hoc queries, we expose observability as a set of tools that agents (or humans, via natural language) can call. That shifts much of the “figure out what’s going on” work from humans manually querying systems to agents that can investigate issues on demand and at scale.

That means you can ask the system questions like:

- “Show me inconsistencies between source and destination data for this customer over the last 24 hours.”

- “Detect schema drift for Orders messages from this EHR since last week.”

- “Summarize the top three reasons messages are failing for this workflow and suggest remediations.”

Under the hood, those requests map to concrete actions:

- Identifying inconsistencies between source and destination payloads

- Detecting schema drift as EHRs and downstream systems evolve

- Spotting patterns in message failures, retries, and latency

- Pinpointing clear root causes when something regresses

- Automating resolution for recurring, well-understood issues

- Powering complex natural-language searches across messages, metrics, and traces
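Two of these actions reduce to straightforward comparisons once the data is in hand. A minimal sketch, assuming messages have already been parsed into flat key/value records and that destination records have been projected back onto comparable source fields (real HL7v2 or FHIR payloads are nested and transformed, so a production check would compare mapped paths): schema drift is treated as fields that appear, disappear, or change type between a baseline window and a recent one, and source/destination inconsistency as a keyed, field-by-field comparison. The function names and record shapes are hypothetical.

```python
from collections.abc import Iterable, Mapping
from typing import Any

Record = Mapping[str, Any]


def field_types(messages: Iterable[Record]) -> dict[str, set[str]]:
    """Map each top-level field to the set of Python type names observed for it."""
    seen: dict[str, set[str]] = {}
    for msg in messages:
        for key, value in msg.items():
            seen.setdefault(key, set()).add(type(value).__name__)
    return seen


def schema_drift(baseline: Iterable[Record], recent: Iterable[Record]) -> dict[str, list[str]]:
    """Fields that appeared, disappeared, or changed type between two windows."""
    before, after = field_types(baseline), field_types(recent)
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "type_changed": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }


def inconsistencies(source: Iterable[Record], destination: Iterable[Record],
                    key: str = "id") -> dict[str, Any]:
    """Compare records by key: missing, unexpected, and per-field mismatches."""
    src = {r[key]: r for r in source}
    dst = {r[key]: r for r in destination}
    mismatched: dict[Any, list[str]] = {}
    for k in sorted(src.keys() & dst.keys()):
        fields = sorted(f for f in src[k].keys() | dst[k].keys()
                        if src[k].get(f) != dst[k].get(f))
        if fields:
            mismatched[k] = fields
    return {
        "missing_in_destination": sorted(src.keys() - dst.keys()),
        "unexpected_in_destination": sorted(dst.keys() - src.keys()),
        "mismatched": mismatched,
    }


last_week = [{"id": "1", "status": "NW", "priority": 1}]
this_week = [{"id": "2", "status": "NW", "priority": "STAT", "reason": "chest pain"}]
print(schema_drift(last_week, this_week))
# {'added': ['reason'], 'removed': [], 'type_changed': ['priority']}

ehr = [{"id": "1", "sbp": 120}, {"id": "2", "sbp": 135}, {"id": "3", "sbp": 110}]
lake = [{"id": "1", "sbp": 120}, {"id": "2", "sbp": 153}]
print(inconsistencies(ehr, lake))
# {'missing_in_destination': ['3'], 'unexpected_in_destination': [], 'mismatched': {'2': ['sbp']}}
```

Exposed as tools, functions like these give an agent a precise, structured answer to questions such as “detect schema drift since last week,” which it can then explain in plain language.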

By pairing MCP with deep observability, we’re turning interoperability operations into a closed-loop system: the same layer that stands up a configuration also watches it in real time, explains what’s going on, and helps correct issues quickly. Incidents take less time to understand, routine issues can be auto-remediated, and teams spend more time shaping the system and less time firefighting.
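The third example question, summarizing the top reasons a workflow fails and suggesting remediations, is where the loop closes. A minimal sketch, assuming failures have already been normalized into categories: count reasons per workflow, keep the most common, and attach an automatic action only where a playbook entry exists, escalating everything else. `Failure`, `PLAYBOOK`, `triage`, and the category labels are hypothetical; in the workflow described above an agent could also propose fixes for unfamiliar reasons, and a fixed table simply keeps this sketch deterministic.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Failure:
    workflow: str
    reason: str  # normalized failure category (hypothetical labels)


# Hypothetical playbook: only well-understood reasons map to an automatic action.
PLAYBOOK: dict[str, str] = {
    "destination_timeout": "retry with backoff",
    "expired_credentials": "refresh credentials and replay",
}


def triage(failures: list[Failure], workflow: str, top_n: int = 3) -> list[tuple[str, int, str]]:
    """Top failure reasons for one workflow, each paired with an action or an escalation."""
    counts = Counter(f.reason for f in failures if f.workflow == workflow)
    return [
        (reason, count, PLAYBOOK.get(reason, "escalate to a human"))
        for reason, count in counts.most_common(top_n)
    ]


failures = (
    [Failure("orders", "destination_timeout")] * 5
    + [Failure("orders", "unknown_code")] * 3
    + [Failure("orders", "expired_credentials")] * 2
    + [Failure("orders", "malformed_segment")]
    + [Failure("adt", "destination_timeout")] * 9
)
for reason, count, action in triage(failures, "orders"):
    print(f"{count:>2}  {reason}: {action}")
#  5  destination_timeout: retry with backoff
#  3  unknown_code: escalate to a human
#  2  expired_credentials: refresh credentials and replay
```

Gating automation on an explicit playbook is the conservative choice: recurring, well-understood issues are handled without a person, while anything new still reaches a human.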

The Outcome: More Reliable Data for Healthcare AI

Reliable, consistent clinical data is the backbone of the many AI models already operating in real-world healthcare today. Some examples we’ve heard include hospitals using AI to flag early signs of sepsis from streaming vitals, prioritize MRI scans that may show a stroke, and predict which patients are most likely to be readmitted so care teams can intervene sooner. Care managers lean on AI to surface gaps in chronic disease management, while front-line clinicians use summarization tools to cut through chart clutter and focus on what matters for the next decision.

All of these use cases depend on high-quality data flowing in real time from many systems. As the number of deployed AI solutions rapidly increases, a foundational data infrastructure that can configure systems by intent and continuously monitor live data flows becomes essential. If a single blood pressure feed drops, a problem list isn’t normalized, or timestamps drift out of sync, model outputs—and ultimately patient care—can suffer.

That’s the vision behind our MCP work at Redox: making interoperability feel more natural for developers and clinicians, while quietly doing the hard, unglamorous work required to keep healthcare data trustworthy for the AI era.

Get Early Access to the Redox MCP Server (BETA)

We’re already using the Redox MCP server internally and are gearing up to roll it out to a select group of beta customers in early 2026. Want to be one of the first to try it out? Let your Redox account team know you’re interested in joining the beta program and come experiment with us.

This post was co-written by Sasi Mukkamala, Redox’s Chief Technology Officer, and Rachel Witalec, Redox’s Chief Product Officer.